The $670K Shadow AI Problem Your Board Doesn't Know About

Your board asked the question last quarter: “What’s our AI Strategy?” You presented initiatives: productivity tools, customer service automations, and data analytics upgrades. They nodded approvingly. Strategy approved, operational excellence and growth guaranteed!

But here’s what nobody asked: “What’s our AI governance strategy?”

That is an expensive silence. IBM’s 2025 Cost of a Data Breach Report reveals that 63% of organizations lack formal AI governance policies, while 97% of AI-related breaches stem from inadequate AI access controls. Organizations with high levels of shadow AI, employees using unauthorized AI tools, each breach carried an added cost of $670,000.

Do the math: three incidents and you’ve exhausted your annual security budget. Five incidents and you’re explaining to the board why the innovative AI initiative became a liability crisis.

This isn’t theoretical. It’s happening right now at companies similar to yours. And the CTOs who establish robust governance frameworks today will operate with confidence while their competitors scramble through reactive crisis management tomorrow.

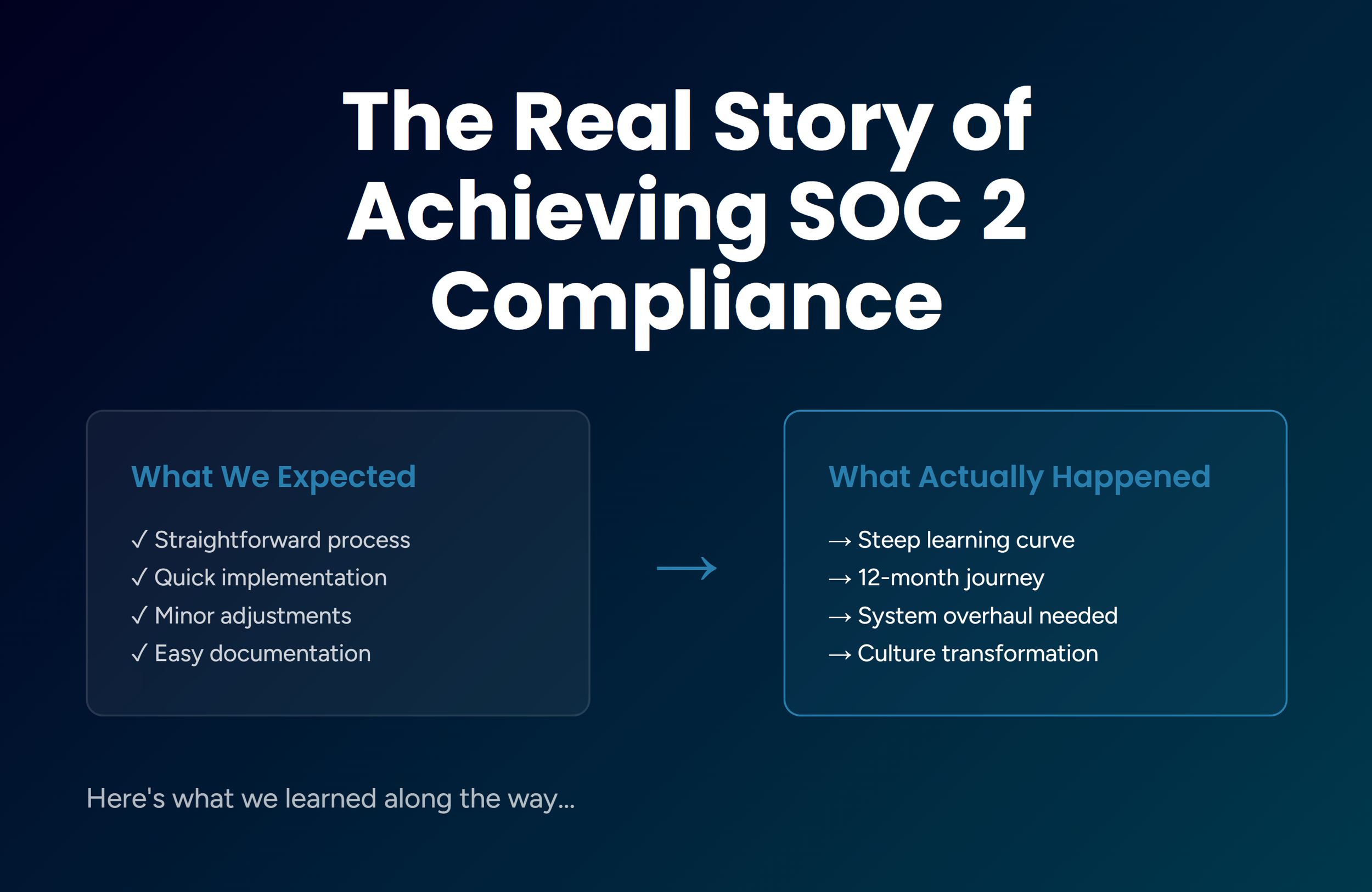

The Gap Between AI Adoption and AI Control

Here’s what’s actually happening in most organisations, including yours, right now:

Your marketing team is feeding customer data into ChatGPT to draft personalized emails, uploading proprietary information to a public AI platform.

Your engineering team is using gitHub Copilot to accelerate development, potentially exposing code that contains IP, API Keys, or architectural vulnerabilities.

Your finance team is experimenting with AI-powered forecasting tools, processing sensitive financial data through unvetted third-party models.

None of this is malicious. Your teams are just trying to work faster and smarter. But without governance, every productivity gain introduces hidden risk: data exposure, IP loss, regulatory violations, and attack surfaces you didn’t know existed.

The organizations that win the AI era won’t be those who adopted fastest, they’ll be those who governed smartest.

The Three Pillars of AI Resilience

In our extensive work with San Francisco Bay Area technology leaders, we’ve identified three interdependent pillars that separate resilient AI operations from reactive crisis management:

1. Governance: Who Decides and Who's Accountable

AI governance isn’t just a compliance checkbox. It’s the organizational framework that determines who can deploy AI, under what conditions, and who is answerable when something goes wrong.

What this looks like in practice:

Executive ownership: Designate a single executive (CTO, CIO, or CISO) accountable for AI risk; not a committee, not a working group, but one person who reports to the board.

Clear policies: Document what AI tools are sanctioned, what data can be processed, and what approval workflows are required before deployment.

Continuous monitoring: Implement tools like IBM watsonx.governance or equivalent platforms to track model lifecycles, data flows, and usage patterns in real-time.

Alignment with standards: Build your framework around recognized standards, such as NIST AI Risk Management Framework, OECD AI Principles, or EU Ethics Guidelines for Trustworthy AI.

According to McKinsey, companies with CEO-level oversight in AI governance report measurably stronger outcomes compared to their peers. On the other hand, companies without it discover liability only after the breach, when remediation costs 5-10x more than prevention.

2. Operations: How You Protect Your Systems and Data

While governance tells you what to do, operations is how you actually do it. This is where theory meets infrastructure, and where most organizations discover their vulnerabilities.

a. Adversarial AI Defense

Attackers now use generative AI to automate phishing campaigns, create deepfake impersonations, and social engineering attacks. Recent data shows that 16% of all breaches involve AI-driven strategies, with the banking industry predicted to suffer AI-enabled fraud losses exceeding $40 billion by 2027.

Your defense strategy must include:

Input validation and guardrails:Filter and sanitize all data entering AI models to prevent prompt injection attacks.

Model vulnerability scanning: Test your AI systems the way you test applications, with adversarial scenarios that simulate “your AI turned against you”.

Security operation integration: Ensure your SOC, incident response plans, and endpoint detection tools account for AI-specific threats, not just traditional network traffic.

b. Data Provenance and Privacy Controls

Training AI models requires massive data ingestion, and that’s where exposure occurs. When 27% of organizations report that over 30% of their AI-processed data includes private information, you’re not just risking compliance violations; you’re risking your IP, customer trust, and competitive position.

Your data strategy must include:

Data lineage tracking: Document where data originates, how it’s used, who accesses it, and where it’s stored throughout its lifecycle.

Shadow AI monitoring: Deploy tools that detect unauthorized AI usage, flag risky uploads, and provide secure sanctioned alternatives.

Digital rights management: Lock down proprietary information with verifiable content credentials and access controls that persist even when data is copied or shared.

c. Expandability and Bias Testing

Business leaders won’t adopt mission-critical AI when they can’t validate outcomes, justify decisions, or explain the logic to regulators or customers. Yet many AI systems operate as black boxes, producing results without rationale.

Your trust framework must include:

Explainable AI (XAI) pipelines: Use tools like LIME or SHAP to extract decision rationale from complex models.

Continuous bias testing: Institute fairness testing and human-in-the-loop review for high-impact decisions, such as hiring, credit approval, and medical diagnosis.

Audit-ready documentation: Maintain model cards, training data provenance, and decision logs that can withstand regulatory scrutiny.

3. Strategy: Where You're Going and How You’ll Get There

Governance and operations keep you secure today. Strategy ensures you stay competitive tomorrow.

Infrastructure planning: AI model training can strain energy budgets and data center capacity. Review your infrastructure with a cost-benefit lens, consider hybrid cloud architecture, modular frameworks, and sustainable compute strategies that scale without breaking your budget.

Vendor risk management: Locking into a single AI vendor or infrastructure stack creates obsolescence risk. Vet contracts for flexibility, interoperability, and exit rights. Maintain optionality.

Capability building: Your team may lack deep generative AI or data science expertise, and the talent market is tight. Invest in reskilling programs, build human-machine collaboration workflows, and consider augmenting internal teams with fractional CIO/CISO/CTO partnerships for strategic guidance.

What Good Governance Looks Like: Real-World Scenario

Consider two fintech companies launching LLM-powered customer service assistants in Q1 2025.

Company A moved fast. Engineers uploaded customer financial data to a public AI sandbox to accelerate training. No governance framework. No model access controls. No data provenance architecture.

The result: Within three months, a prompt injection attack exposed 15,000 customer financial profiles. Total cost: $5.52 million (breach remediation, regulatory fines, customer churn, reputational damage).

Company B moved strategically. They implemented IBM watsonx.governance, conducted adversarial testing before launch, restricted model access to approved personnel, and established executive-level accountability through their CTO.

The result: When they experienced a contained security event, the total cost was $3.62 million, and the time to containment was 70% faster than the industry average.

Your 90-Day AI Governance Roadmap

You don’t need to solve everything at once. What you can do is to start with the highest-leverage actions that reduce risk while enabling innovation.

Week 1-2:

Conduct an AI risk inventory: Document every AI model, tool, and platform currently in use, including shadow AI.

Map data flows: Identify what data is being processed by AI systems, where it originates, and who has access.

Assess current gaps: Evaluate your current governance policies, access controls, and monitoring capabilities against the NIST AI Risk Management Framework.

Week 3-4:

Assign executive responsibility: Designate a single executive owner for AI governance (ideally CTO, CISO, or equivalent).

Draft initial policies: Create clear documentation for sanctioned AI tools, data processing rules, and approval workflows.

Form a cross-functional governance team: Include representatives from security, legal, compliance, data science, and business units.

Week 5-8:

Deploy a governance platform: Pilot tools like watsonx.governance or comparable solutions to track model usage and compliance.

Set up access controls: Implement role-based permissions, data classification schemes, and usage logging.

Provide sanctioned alternatives: Give teams approved AI tools to reduce shadow AI adoption (e.g., enterprise ChatGPT licenses with data controls).

Week 9-12:

Conduct adversarial testing: Simulate prompt injection, data leakage, and model manipulation scenarios.

Refine policies based on findings: Update documentation with lessons learned and edge cases discovered.

Present framework to board: Deliver a risk dashboard showing current governance status, identified vulnerabilities, and mitigation progress.

Ongoing: Monitor, Audit, and Evolve

Quarterly governance reviews: Assess policy effectiveness, emerging threats, and technology changes.

Annual third-party audits: Engage external experts to validate your framework against industry standards.

Continuous learning: Stay current on regulatory developments, attack patterns, and governance best practices.

The Cost of Waiting

The average data breach now costs $4.44 million globally and $10.22 million in the United States. Shadow AI exposure adds another $670,000 per incident. These aren't abstract numbers, they're budget line items, board explanations, and competitive disadvantages.

But the real cost isn't just financial. It's strategic.

Every day without governance is a day your competitors establish AI capabilities you'll struggle to match. Every uncontrolled AI deployment is technical debt that compounds with interest. Every shadow AI tool is a vulnerability waiting to be exploited.

The window to establish governance leadership is now, before regulatory frameworks tighten, before breach costs double, and before your competitors build the operational advantages you'll spend years trying to catch.

What’s Next

The enterprises that emerge as AI leaders in 2026 won't be those who adopted fastest, they'll be those who governed smartest. The foundation you build today determines whether AI becomes your competitive advantage or your crisis management problem.

The cost of waiting isn't just financial, it's strategic. The time to act is now.

Need help implementing an AI governance framework for your organization? Our team has guided 100s of Bay Area CTOs through governance implementation, testing, and strategic planning. Schedule a free consultation to discuss your specific situation.