Safe AI Use and Security Guidance Employees Actually Need

No matter the organizational stance or policy requirements, your best employees are already using AI. The question you need to focus on is whether they're using it safely.

A recent survey found that 63% of organizations lack formal AI governance frameworks, yet employees across departments are feeding customer data into ChatGPT, running proprietary code through GitHub Copilot, and processing sensitive financials through unvetted AI tools. None of it is malicious, but all of it is risky.

The solution isn't banning AI (your competitors will thank you for that). It's establishing clear guardrails that protect your organization while enabling the productivity gains AI delivers.

Here's what a practical AI acceptable use policy actually looks like, and why your employees need one now.

Why AI Policies Matter More Than You Think

Think of an AI acceptable use policy as a corporate seatbelt. It doesn't stop your organization from moving fast and innovating. It simply ensures that if something goes wrong, you're protected from being launched through the windshield.

An effective policy serves three critical functions:

Governance establishes who owns AI decisions and where access boundaries exist. Without clear ownership, AI sprawl creates coordination chaos across departments.

Risk management reduces exposure to privacy violations, compliance failures, and data breaches. The average AI-related security incident now costs organizations $670,000, a number that should focus any executive's attention.

Enablement provides a framework for productivity. When employees know what's approved and what's off-limits, they can confidently use AI without second-guessing every decision.

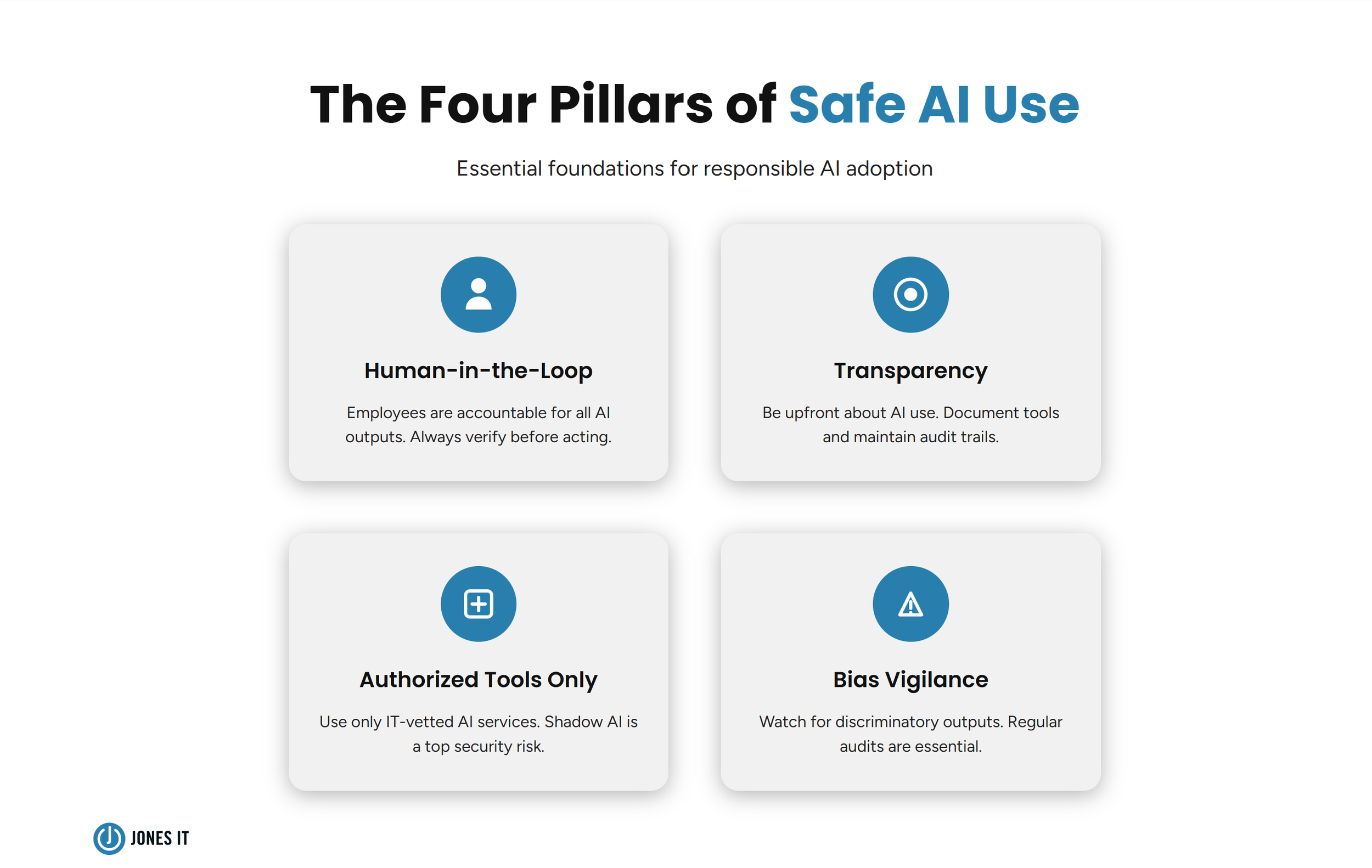

The Four Pillars of Safe AI Use

1. Human-in-the-Loop

This is non-negotiable: employees are ultimately accountable for all AI-generated outputs.

A competent human must always monitor AI tasks, verify the accuracy of results, and exercise independent judgment before making final decisions. AI can draft the contract, analyze the data, or generate the report, but a human reviews, validates, and approves.

This isn't about distrust of technology. It's about recognizing that AI systems can hallucinate, inherit biases from training data, or simply misunderstand context. The human in the loop catches these failures before they become costly mistakes.

2. Transparency

Employees must be upfront about the use of AI in their work. This builds trust internally and externally while creating accountability trails.

What does transparency look like in practice?

Disclosing when AI assisted in content creation,

Documenting which AI tools processed what data,

Being honest with clients about AI involvement in deliverables, and

Maintaining audit trails for AI-assisted decisions.
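For teams that want the audit trail to be more than a spreadsheet, one option is a structured record for each AI-assisted task. The sketch below assumes a JSON Lines log file. The `AIUsageRecord` schema, its field names, the tool name, and the `ai_audit_log.jsonl` path are all hypothetical illustrations, not a standard.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class AIUsageRecord:
    """One audit-trail entry for AI-assisted work (hypothetical schema)."""
    employee: str
    tool: str                   # which AI tool was used
    data_categories: list[str]  # what kinds of data the tool processed
    purpose: str                # what the output was used for
    human_reviewer: str         # who verified the output before it was used
    disclosed_to_client: bool = False
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_log_line(record: AIUsageRecord) -> str:
    """Serialize a record as one JSON line."""
    return json.dumps(asdict(record), sort_keys=True)


def append_to_log(record: AIUsageRecord, path: str = "ai_audit_log.jsonl") -> None:
    """Append the record; this function never rewrites earlier entries."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(to_log_line(record) + "\n")


# Hypothetical example entry for a client-facing draft.
record = AIUsageRecord(
    employee="j.doe",
    tool="enterprise-assistant",
    data_categories=["public"],
    purpose="first draft of a client newsletter",
    human_reviewer="a.smith",
    disclosed_to_client=True,
)
print(to_log_line(record))
```

The `human_reviewer` and `disclosed_to_client` fields tie the log back to the human-in-the-loop and disclosure principles. If nobody can be named as reviewer, the output was not reviewed.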

Organizations that practice transparency avoid the credibility damage that comes from AI use being discovered rather than disclosed.

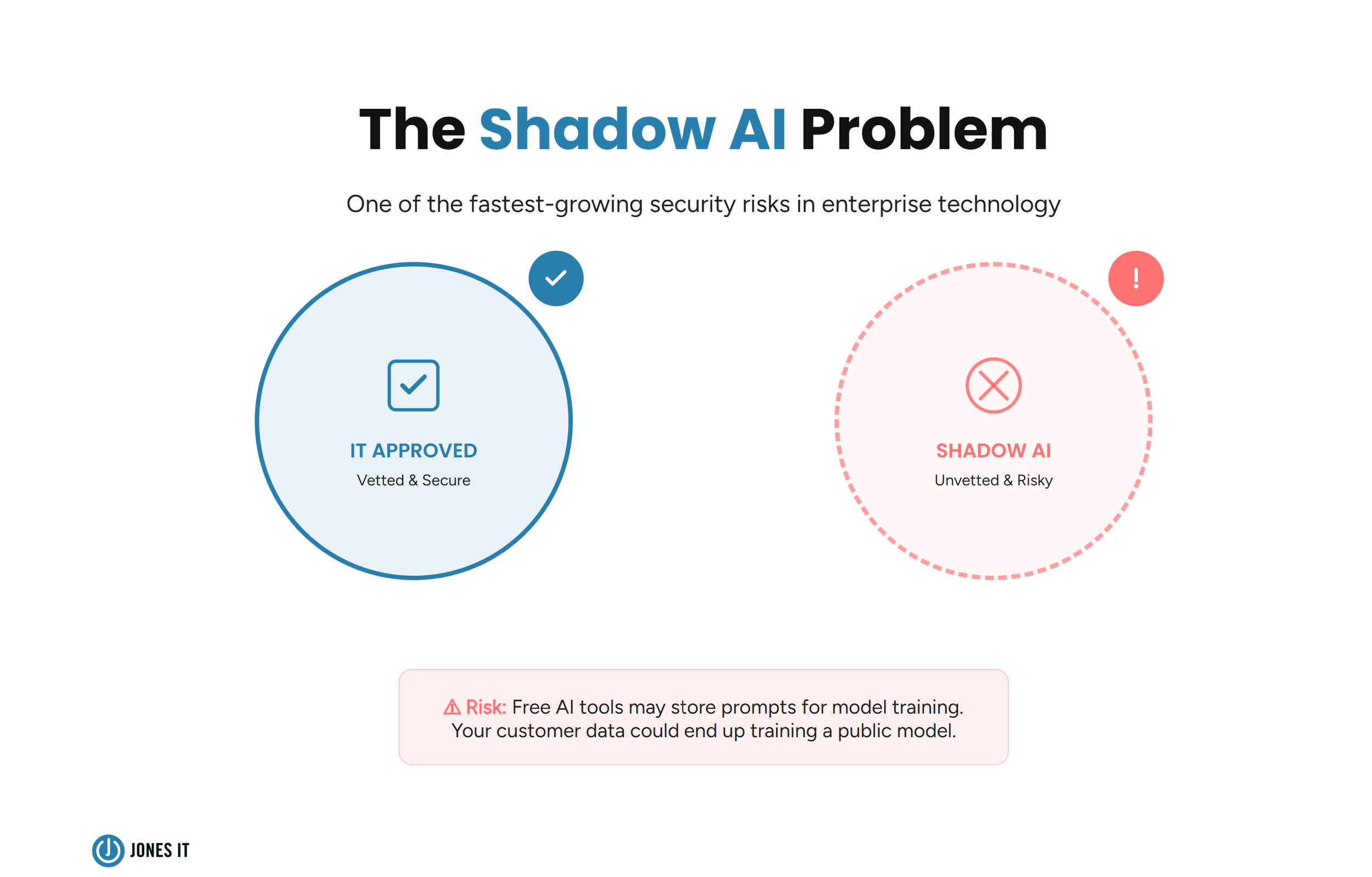

3. The Shadow AI Problem

This is where most organizations fall short. The principle is simple: employees must use only AI services that IT has vetted and authorized.

Shadow AI, meaning unapproved AI tools used without oversight, is one of the fastest-growing security risks in enterprise technology. Many free AI tools retain prompts and may use them for model training. That customer data your marketing team uploaded? It might now be helping train a public model.

Prohibited actions typically include:

Using AI for hiring or evaluation decisions without explicit approval,

Creating deepfakes or synthetic media,

Automating outreach that violates a platform's terms of service, and

Processing data through any tool not on the approved list.

The IT vetting process exists for a reason. It evaluates data handling practices, security certifications, and compliance with regulations like GDPR and CCPA before any tool touches company data.
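One way to give "is this tool approved for this data?" a consistent answer is to record the vetting criteria as data. In this sketch, the four classification levels, the assessment fields, and the tool names are hypothetical placeholders. Your own vetting criteria and data classification scheme would replace them.

```python
from dataclasses import dataclass
from enum import IntEnum


class DataClass(IntEnum):
    """Hypothetical classification levels, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3  # for example customer PII or trade secrets


@dataclass(frozen=True)
class ToolAssessment:
    """Outcome of IT's review of one AI tool (hypothetical criteria)."""
    no_training_on_prompts: bool  # vendor commits not to train on company prompts
    security_certified: bool      # holds the security certifications IT requires
    privacy_terms_ok: bool        # data processing terms reviewed for GDPR/CCPA
    max_data_class: DataClass     # most sensitive data IT has cleared the tool for


# Hypothetical registry; tool names are placeholders, not product recommendations.
TOOL_ASSESSMENTS = {
    "enterprise-assistant": ToolAssessment(True, True, True, DataClass.CONFIDENTIAL),
    "code-helper": ToolAssessment(True, True, False, DataClass.INTERNAL),
}


def passes_vetting(a: ToolAssessment) -> bool:
    """A tool is approved only if every vetting criterion is met."""
    return a.no_training_on_prompts and a.security_certified and a.privacy_terms_ok


def may_use(tool: str, data_class: DataClass) -> bool:
    """Deny by default: unknown tools and tools that failed vetting are never allowed."""
    assessment = TOOL_ASSESSMENTS.get(tool)
    if assessment is None or not passes_vetting(assessment):
        return False
    return data_class <= assessment.max_data_class
```

The design choice here is deny by default. A tool that nobody has assessed, such as a free chatbot an employee found last week, is treated exactly like one that failed review.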

4. Bias Vigilance

Users should actively watch for biased or discriminatory outputs. AI systems can perpetuate, and even amplify, biases present in their training data.

Regular audits are necessary to ensure AI-driven decisions don't unfairly impact individuals based on protected characteristics. This is especially critical for any AI involvement in:

Hiring and recruitment,

Performance evaluations,

Customer credit decisions, and

Service prioritization.

If an AI output seems to disadvantage a particular group, that's a red flag requiring human review before any action is taken.
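A widely used screening heuristic for hiring outcomes is the "four-fifths rule" from the US Uniform Guidelines on Employee Selection Procedures. If a group's selection rate is less than 80% of the highest group's rate, that is treated as evidence of possible adverse impact. We chose it as an illustration; this policy does not prescribe it. It is also a trigger for human review, not a legal conclusion. The group labels and function names below are placeholders.

```python
def adverse_impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """outcomes maps group -> (number selected, number considered).
    Returns each group's selection rate divided by the highest group's rate."""
    rates = {g: sel / total for g, (sel, total) in outcomes.items() if total > 0}
    best = max(rates.values(), default=0.0)
    if best == 0:
        return {g: 1.0 for g in rates}  # nobody selected: no disparity to measure
    return {g: r / best for g, r in rates.items()}


def flag_for_review(outcomes: dict[str, tuple[int, int]], threshold: float = 0.8) -> list[str]:
    """Groups whose ratio falls below the four-fifths threshold."""
    ratios = adverse_impact_ratios(outcomes)
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)


# Hypothetical outcomes from an AI-assisted screening step.
outcomes = {"group_a": (50, 100), "group_b": (30, 100)}
print(flag_for_review(outcomes))
```

For instance, selection rates of 50% and 30% give the second group a ratio of 0.6. That is below 0.8, so the group is flagged and a human should review the process before any action is taken.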

Security and Data Protection

Protecting sensitive information is the most critical security requirement for AI adoption. Your AI security framework should enforce these requirements without exception.

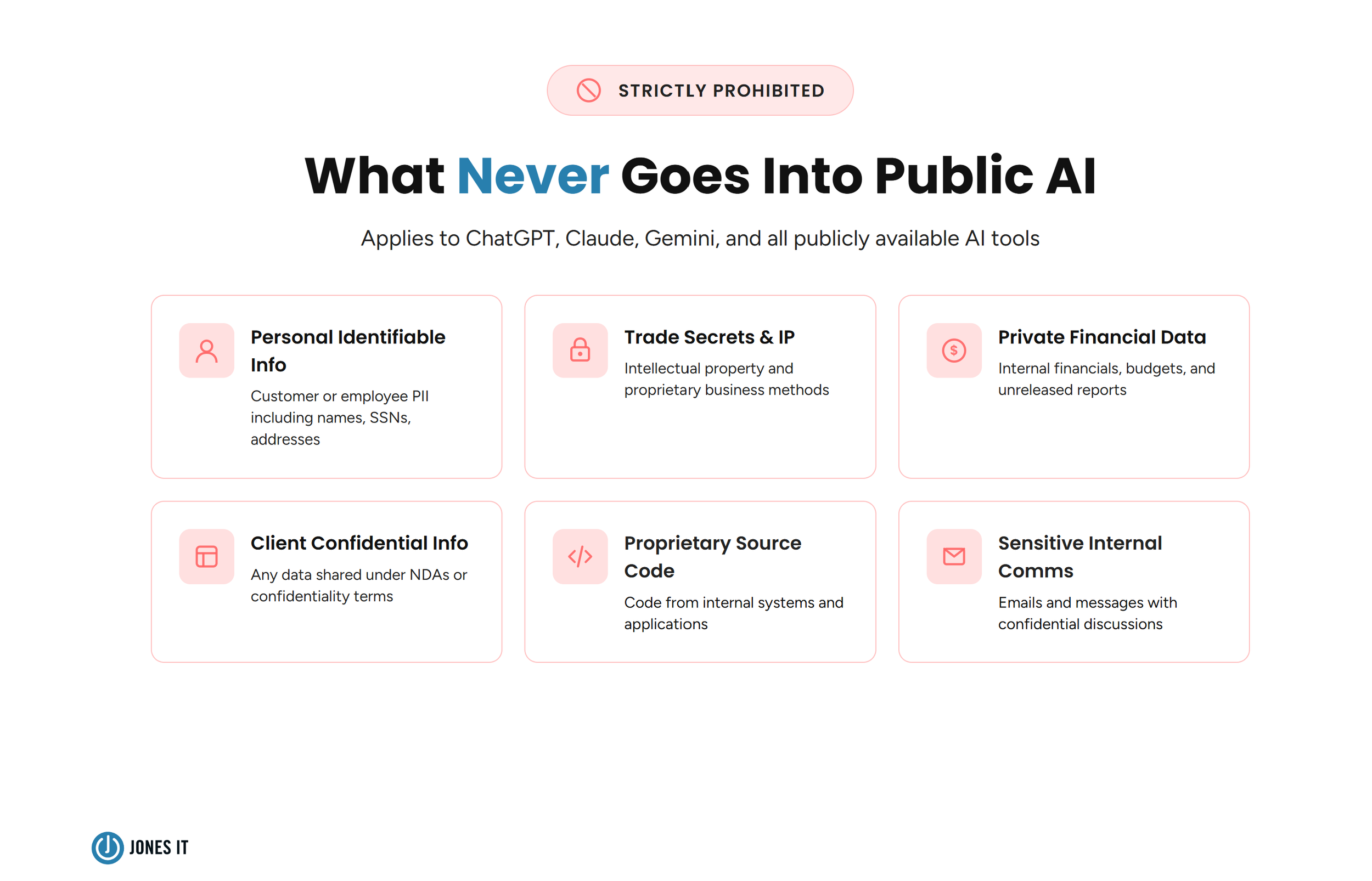

What Should Never Go Into Public AI Tools

Employees must be strictly prohibited from entering the following into any public AI system:

Personally identifiable information (PII) about customers or employees;

Trade secrets and intellectual property;

Private company financial data;

Client confidential information;

Source code from proprietary systems; and

Internal communications containing sensitive discussions.

This applies to the public versions of ChatGPT, Claude, Gemini, and any other publicly available AI tool, regardless of the provider's security claims.
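Some organizations add a lightweight check that runs before text is sent to any AI tool. The sketch below is deliberately crude. It looks only for email addresses, US Social Security number formats, and card-like numbers, which it confirms with the Luhn checksum. The patterns and the `find_sensitive` function are hypothetical. The check will miss names, trade secrets, source code, and most other categories on the list above, so treat it as a safety net, not a substitute for the policy. Dedicated data loss prevention (DLP) tooling goes much further.

```python
import re

# Hypothetical, intentionally simple patterns; real DLP tools use far richer detection.
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
US_SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_CANDIDATE_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")  # 13 to 19 digits


def luhn_valid(candidate: str) -> bool:
    """Luhn checksum, used here to cut false positives on card-like numbers."""
    digits = [int(c) for c in candidate if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_sensitive(text: str) -> list[str]:
    """Return labels for the kinds of sensitive data spotted in text."""
    findings = []
    if EMAIL_RE.search(text):
        findings.append("email")
    if US_SSN_RE.search(text):
        findings.append("us_ssn")
    if any(luhn_valid(m.group()) for m in CARD_CANDIDATE_RE.finditer(text)):
        findings.append("card_number")
    return findings


prompt = "Please summarize the complaint from jane.doe@example.com"
if find_sensitive(prompt):
    print("Blocked: remove sensitive data before using an AI tool.")
```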

Data Protection Best Practices

Anonymization first: Whenever possible, anonymize or pseudonymize data before inputting it into any AI system. This means removing or replacing personally identifying information, such as names and account numbers, before the AI processes the data.
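Pseudonymization replaces identifiers with consistent tokens, so the AI can still reason about "customer A" without learning who customer A is. The sketch below assumes that names are supplied by the caller and that account numbers are runs of 8 to 12 digits, which is a hypothetical format. Each value is replaced with a keyed HMAC-SHA256 token, and the token-to-value map never leaves the company. The `Pseudonymizer` class is illustrative, and the key shown is a placeholder. Keep a real key in a secrets manager.

```python
import hashlib
import hmac
import re

ACCOUNT_RE = re.compile(r"\b\d{8,12}\b")  # hypothetical account-number format


class Pseudonymizer:
    def __init__(self, secret_key: bytes):
        self._key = secret_key
        self._reverse: dict[str, str] = {}  # token -> original value; stays internal

    def _token(self, prefix: str, value: str) -> str:
        """Same key + same value always yields the same token."""
        digest = hmac.new(self._key, value.encode("utf-8"), hashlib.sha256).hexdigest()
        token = f"{prefix}_{digest[:12]}"
        self._reverse[token] = value
        return token

    def pseudonymize(self, text: str, names: list[str]) -> str:
        """Replace account numbers, then the given names (longest first)."""
        text = ACCOUNT_RE.sub(lambda m: self._token("ACCT", m.group()), text)
        for name in sorted(names, key=len, reverse=True):
            text = text.replace(name, self._token("PERSON", name))
        return text

    def restore(self, text: str) -> str:
        """Put real values back into reviewed AI output, inside the company."""
        for token, value in self._reverse.items():
            text = text.replace(token, value)
        return text


p = Pseudonymizer(b"placeholder-key-store-in-a-vault")
safe = p.pseudonymize("Jane Smith disputed a charge on account 123456789.", ["Jane Smith"])
print(safe)  # only this version is sent to the AI tool
```

Because tokens are deterministic, a customer who appears in several documents keeps one pseudonym. Pseudonymized data can still count as personal data under GDPR, because the company holding the key can reverse it.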

Access controls: Implement multi-factor authentication (MFA), role-based access controls (RBAC), and encrypted communication channels for any AI system handling company data.
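Role-based access to AI tools can start as a simple deny-by-default lookup. In this sketch, the roles, tool names, and `Session` fields are hypothetical. In practice, MFA status and role membership would come from your identity provider rather than being passed in by hand.

```python
from dataclasses import dataclass

# Hypothetical deny-by-default permission table: role -> AI tools it may use.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "marketing": {"enterprise-assistant"},
    "engineering": {"enterprise-assistant", "code-helper"},
    "finance": set(),  # no AI tools cleared for this role yet
}


@dataclass
class Session:
    user: str
    role: str
    mfa_verified: bool       # user completed multi-factor authentication
    channel_encrypted: bool  # for example, the request travels over TLS


def authorize(session: Session, tool: str) -> tuple[bool, str]:
    """Return (allowed, reason). Every check must pass; unknown roles get nothing."""
    if not session.mfa_verified:
        return False, "MFA required"
    if not session.channel_encrypted:
        return False, "encrypted channel required"
    if tool not in ROLE_PERMISSIONS.get(session.role, set()):
        return False, f"role '{session.role}' may not use {tool}"
    return True, "ok"


print(authorize(Session("dev1", "engineering", True, True), "code-helper"))
```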

Approved channels only: Prioritize enterprise AI tools with established data agreements, as these are generally safer than public alternatives. If your organization has not yet adopted such tools, escalate the gap to leadership.

Building an AI-Ready Workforce

A secure, AI-ready work environment depends on education. Regulations like the EU AI Act now require organizations that use AI to ensure their staff have a sufficient level of AI literacy: a basic understanding of how AI works and the risks it carries.

What Employees Need to Know

Effective AI training covers:

How AI systems process and potentially store data;

Recognition of AI limitations and failure modes;

Company-specific policies on approved tools and prohibited uses;

Procedures for reporting concerns or policy violations; and

Regulatory requirements affecting their specific role.

Continuous Upskilling

Given how quickly AI capabilities evolve, organizations must offer continuous upskilling resources rather than relying on one-time workshops that quickly become obsolete.

Employees who understand AI become better at using it safely. They recognize when outputs seem wrong, know when human judgment must override AI recommendations, and understand why certain data should never touch an AI system.

Regulatory Alignment

Your AI practices must comply with evolving laws, including GDPR, CCPA, and various state-level AI regulations. A comprehensive AI risk management framework helps ensure compliance while enabling beneficial AI use.

How to Make Policies Meaningful

Clear procedures for reporting violations, such as data leaks, harmful outputs, and policy breaches, are essential. Without enforcement, policies become suggestions that employees will simply ignore under productivity pressure.

Violations should carry consequences that are proportional to severity. A first-time mistake using an unapproved tool for non-sensitive tasks requires different handling than deliberately uploading customer PII to a public AI system.

The goal isn't punishment. It's creating accountability that makes everyone take data protection seriously.

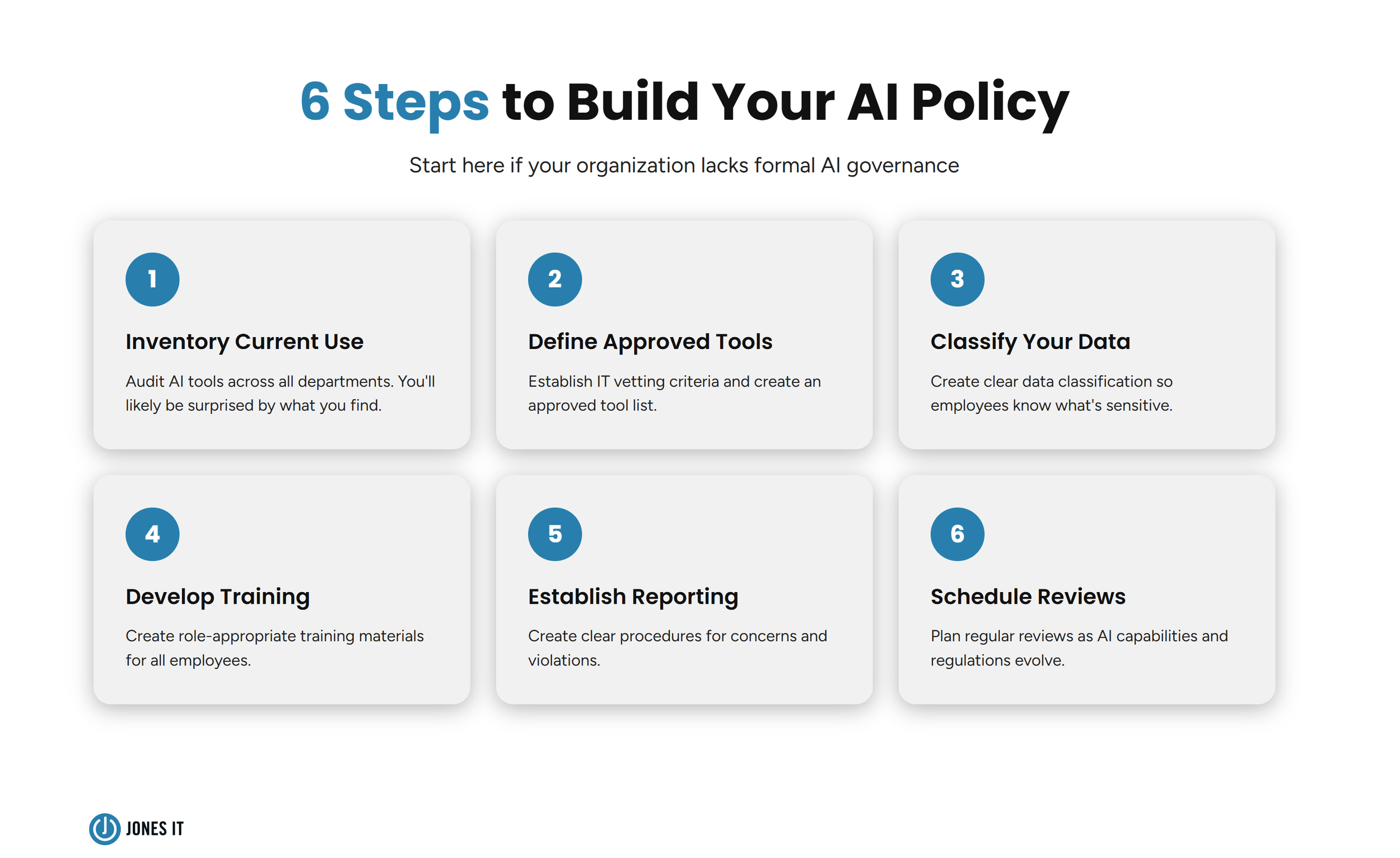

Steps for Building Your AI Policy

If your organization lacks formal AI governance, start here:

Inventory current AI use across departments (you'll likely be surprised).

Define approved tools and establish IT vetting criteria.

Create a clear data classification scheme so that employees know what's sensitive.

Develop training materials appropriate to different roles.

Establish reporting procedures for concerns and violations.

Schedule regular reviews as AI capabilities and regulations evolve.
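The first step, inventorying current AI use, often begins with raw observations from surveys, expense reports, or network logs. Assuming those observations can be reduced to (department, tool) pairs, a sketch like the one below groups unapproved tools by department. The approved list and the tool names are hypothetical placeholders.

```python
from collections import defaultdict

# Hypothetical approved list; replace with your own vetted tools.
APPROVED_TOOLS = {"enterprise-assistant", "code-helper"}


def shadow_ai_report(observations, approved=frozenset(APPROVED_TOOLS)):
    """observations: iterable of (department, tool) pairs.
    Returns {department: sorted unapproved tools}, omitting clean departments."""
    found = defaultdict(set)
    for department, tool in observations:
        if tool not in approved:
            found[department].add(tool)
    return {dept: sorted(tools) for dept, tools in sorted(found.items())}


# Hypothetical observations gathered during the inventory.
observations = [
    ("marketing", "free-chatbot"),
    ("marketing", "enterprise-assistant"),
    ("engineering", "code-helper"),
    ("finance", "pdf-summarizer"),
    ("marketing", "free-chatbot"),
]
print(shadow_ai_report(observations))
```

The report is a starting point for conversations, not for discipline. A department showing up here usually has a real need that no approved tool covers yet.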

Successful AI adoption is not about speed. It's about building governance that enables safe scaling. Your employees want to use AI productively, so give them the guardrails that let them do so without putting the company at risk.

Need help implementing AI governance that enables innovation while protecting your organization? Contact us for a consultation on building AI policies that work.