The SME Guide to AI Risk Management That Actually Works

The AI Risk Most Small Business Leaders Are Ignoring

Your business is already using AI. Marketing uses it to generate content. Operations uses it for predictive analytics. Customer service has a chatbot handling inquiries. Each of those tools promises efficiency, cost savings, and a competitive edge, and in many cases it genuinely delivers.

But here's the question most SME leaders haven't sat down to answer: what happens when your AI system makes a decision that harms a customer, exposes sensitive data, or systematically discriminates against a protected group?

That's not a hypothetical. AI systems fail in ways that traditional software simply doesn't. They inherit biases from training data. They misclassify edge cases with complete confidence. They create vulnerabilities that attackers can exploit through carefully crafted inputs. And when these failures happen at scale, the damage compounds fast, often long before anyone notices the pattern.

Without structured AI risk management, you're not just adopting powerful technology. You're quietly accumulating liabilities that keep growing until one of them surfaces as an incident. For small businesses without enterprise-scale legal teams and crisis management infrastructure, a single AI failure can be genuinely catastrophic.

Why AI Risk Management Differs from Traditional IT Security

Many SME leaders assume that their existing cybersecurity practices cover their AI systems. That assumption is understandable, but it's wrong in ways that matter.

Traditional software follows explicit rules. You can audit the code, trace a decision back to its source, and predict behavior under defined conditions. When something breaks, the failure mode is generally understood, and responsibility is clear.

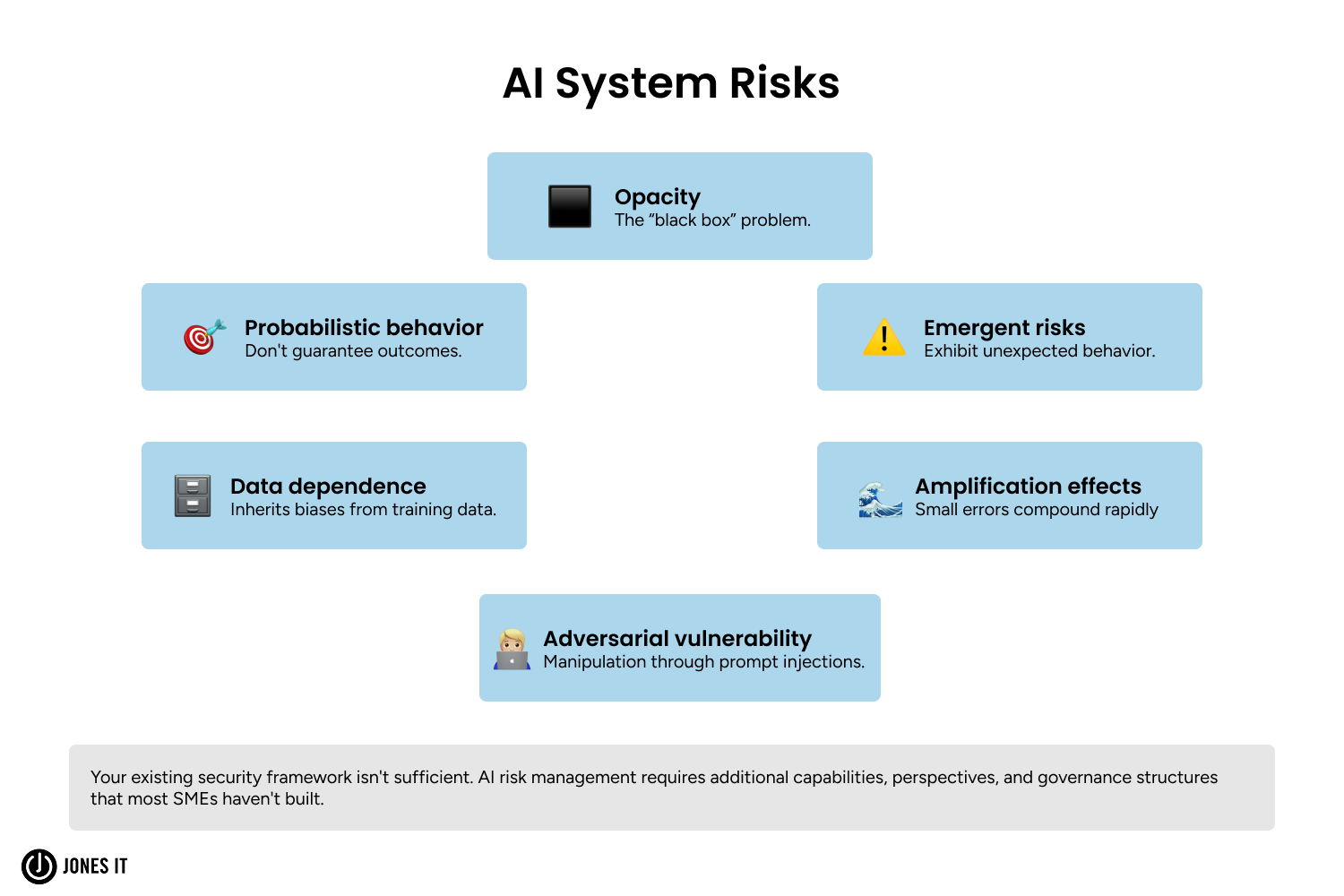

AI systems work differently, and that difference creates risk categories that standard security frameworks simply aren't built to catch.

For starters, there's the opacity problem. Deep learning models make decisions through millions of parameters, and even the people who built them can't fully explain why a specific input produces a specific output. That "black box" nature makes traditional auditing approaches insufficient on their own.

Then there's probabilistic behavior. AI doesn't guarantee outcomes the way rule-based software does. A system that's 99% accurate still fails 1% of the time, but you can't predict which 1% in advance. You only find out after the failure.
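To make that concrete, here is a minimal sketch of the arithmetic. The volume (500 inquiries a day) is a made-up figure for illustration, and the function name is ours rather than part of any framework.

```python
def expected_failures(decisions_per_day: int, accuracy: float, days: int = 30) -> float:
    """Expected number of wrong decisions over a period, assuming a fixed accuracy."""
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    return decisions_per_day * days * (1.0 - accuracy)


# Hypothetical volume: a chatbot answering 500 inquiries a day at 99% accuracy.
monthly_errors = expected_failures(decisions_per_day=500, accuracy=0.99, days=30)
print(f"Expected wrong answers per month: {monthly_errors:.0f}")  # prints 150
```

The number tells you how many failures to plan for, not which interactions they will be. That is the part you only learn afterwards.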

Beyond that, data dependence means your AI inherits whatever biases and blind spots existed in the data it was trained on. If your training data overrepresents certain demographics or underrepresents edge cases, your AI will systematically get those cases wrong, often without any obvious signal that it's happening.

Perhaps most concerning is the amplification effect. When AI makes mistakes, it does so at speed and scale. A biased hiring algorithm doesn't discriminate once. It discriminates thousands of times before the pattern becomes visible.

The bottom line is that your existing security framework isn't sufficient for AI. Managing AI risk requires additional capabilities and governance structures that most small businesses haven't yet built, and that's exactly what this guide is here to help with.

What It Actually Costs Small Businesses to Get AI Risk Wrong

Some SME leaders acknowledge that AI risks exist but treat them as a future problem to address once they've secured a competitive advantage through rapid adoption. That calculation tends to backfire, and here's why.

Regulatory pressure is intensifying. The EU AI Act creates real legal obligations for high-risk AI systems, and similar frameworks are emerging globally. Compliance isn't optional, and penalties are substantial relative to SME revenues.

Liability exposure is expanding. If your vendor's model exhibits bias, but you deployed it without adequate testing, you may share liability even if you didn't build the model. Legal frameworks are still evolving, but the direction is consistently toward expanded organizational responsibility.

Reputational damage compounds over time. A data breach affects customers whose information was exposed. A biased AI system that systematically discriminates against protected groups creates PR crises, customer exodus, and talent flight that take years to recover from. That's a very different risk profile.

Insurance gaps leave you exposed. Traditional liability insurance wasn't written to cover AI-specific risks. Many policies explicitly exclude algorithmic decision-making. Without appropriate coverage, SMEs deploying AI are carrying uninsured risk that they may not even be aware of.

And perhaps most importantly, the window for proactive management is closing. Organizations that build risk management into their AI strategy from the start will eventually compete against organizations that are scrambling to retrofit compliance onto already-deployed systems under regulatory pressure. The retrofit approach is considerably more expensive and far more disruptive.

The Two AI Risk Management Frameworks Best Suited for SMEs

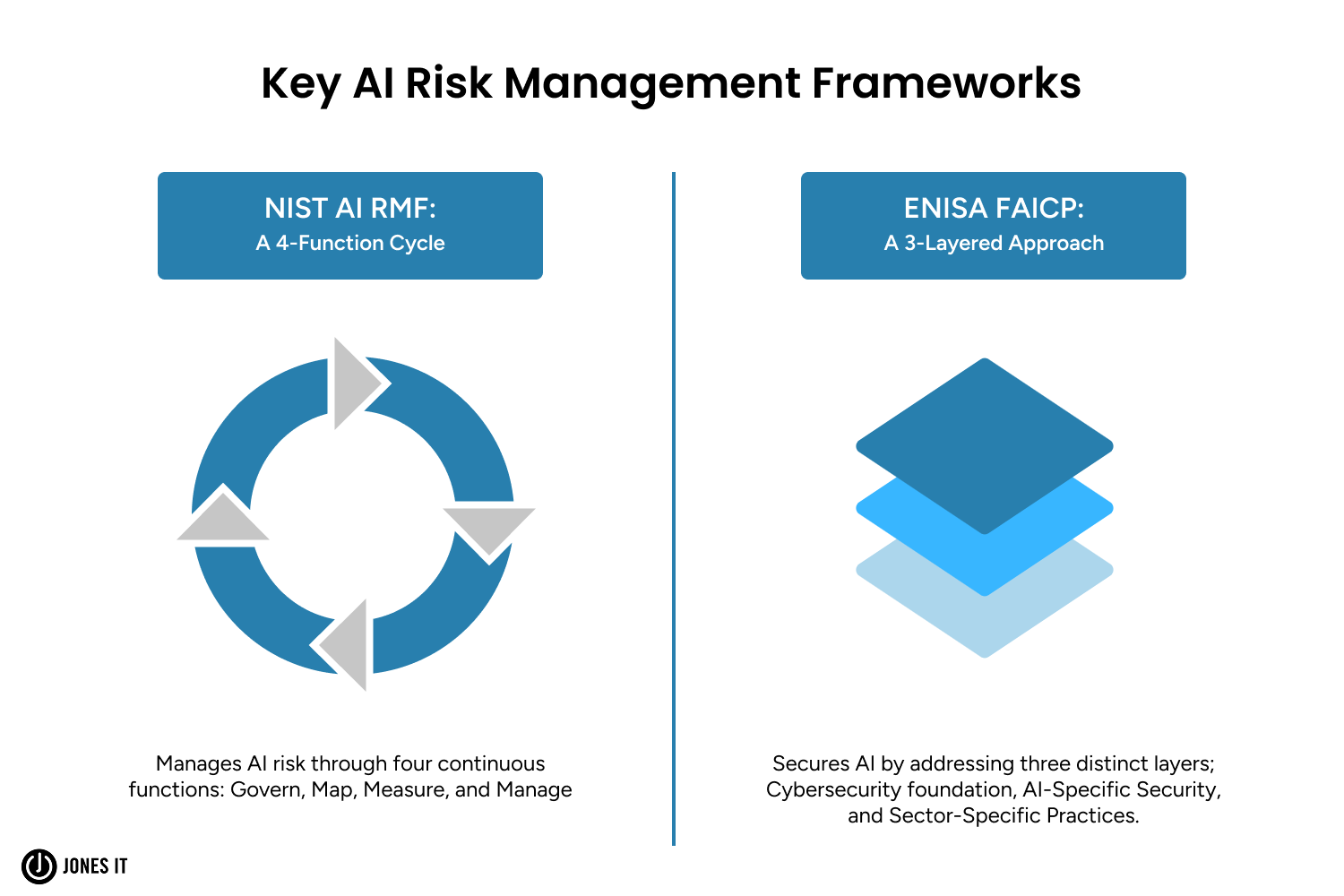

Multiple AI risk management frameworks exist, but most are built for large enterprises with dedicated security teams and substantial compliance budgets. For small businesses, two frameworks stand out as genuinely accessible starting points.

1. NIST AI Risk Management Framework: The Best Foundation for SMEs

The NIST AI RMF is voluntary, widely recognized, and specifically designed to be usable by organizations without specialist AI security staff. Rather than imposing prescriptive requirements, it focuses on building AI trustworthiness through continuous, iterative processes that evolve as your systems and risks evolve.

One of its most practical advantages is cost. Unlike proprietary frameworks that require expensive consultants or certification programs, NIST provides comprehensive documentation, implementation guides, and case studies at no charge. That means small businesses can implement the framework using internal resources, with targeted outside support only where genuine knowledge gaps exist.

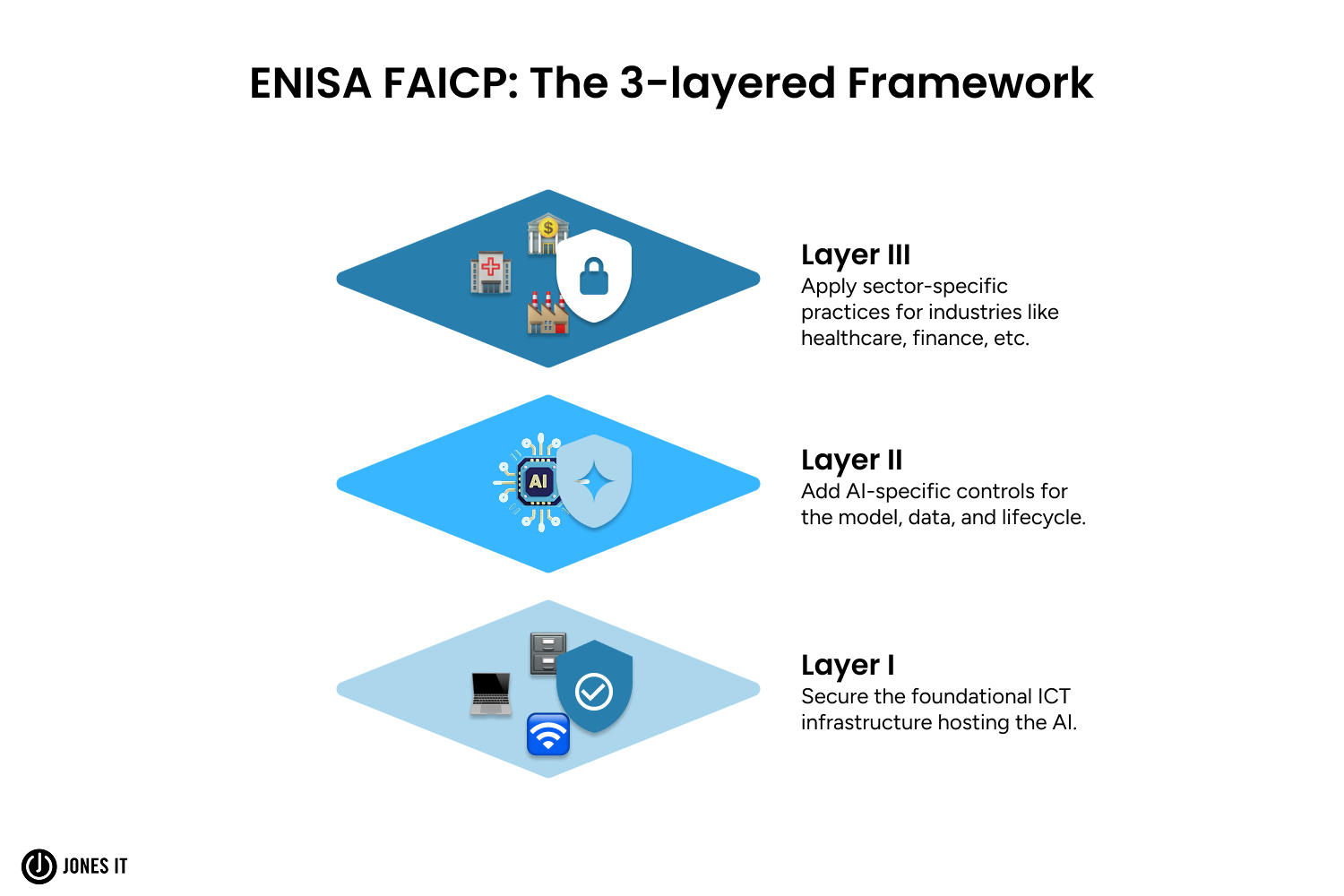

2. ENISA FAICP: A Layered Approach That Builds on What You Already Have

The ENISA Framework for AI Cybersecurity Practices (FAICP) takes a three-layer approach that builds AI security on top of existing cybersecurity practices. That architecture is important, because it means you don't have to rebuild your security program from scratch. Instead, you extend what you already have.

The three layers, starting with cybersecurity foundations, then AI fundamentals, then AI-specific advanced security, allow SMEs to implement progressively. That prevents the paralysis that comes from trying to do everything at once. It also means early investments pay off even before you reach the more sophisticated layers.

For SMEs operating in or serving European markets, FAICP has the additional benefit of implicit alignment with EU regulatory expectations, which reduces compliance friction down the road.

The Single Most Important Principle When Starting Out

Before diving into either framework in depth, one principle is worth stating clearly: the biggest mistake organizations make is trying to do everything at once.

Comprehensive frameworks can feel overwhelming, and that overwhelm often leads either to inaction or to compliance theater that checks boxes without actually reducing risk. The effective approach is to start small, build incrementally, and focus first on your highest-risk AI applications. As we've seen working with Bay Area businesses, imperfect processes that function and improve over time beat perfect processes you're still designing.

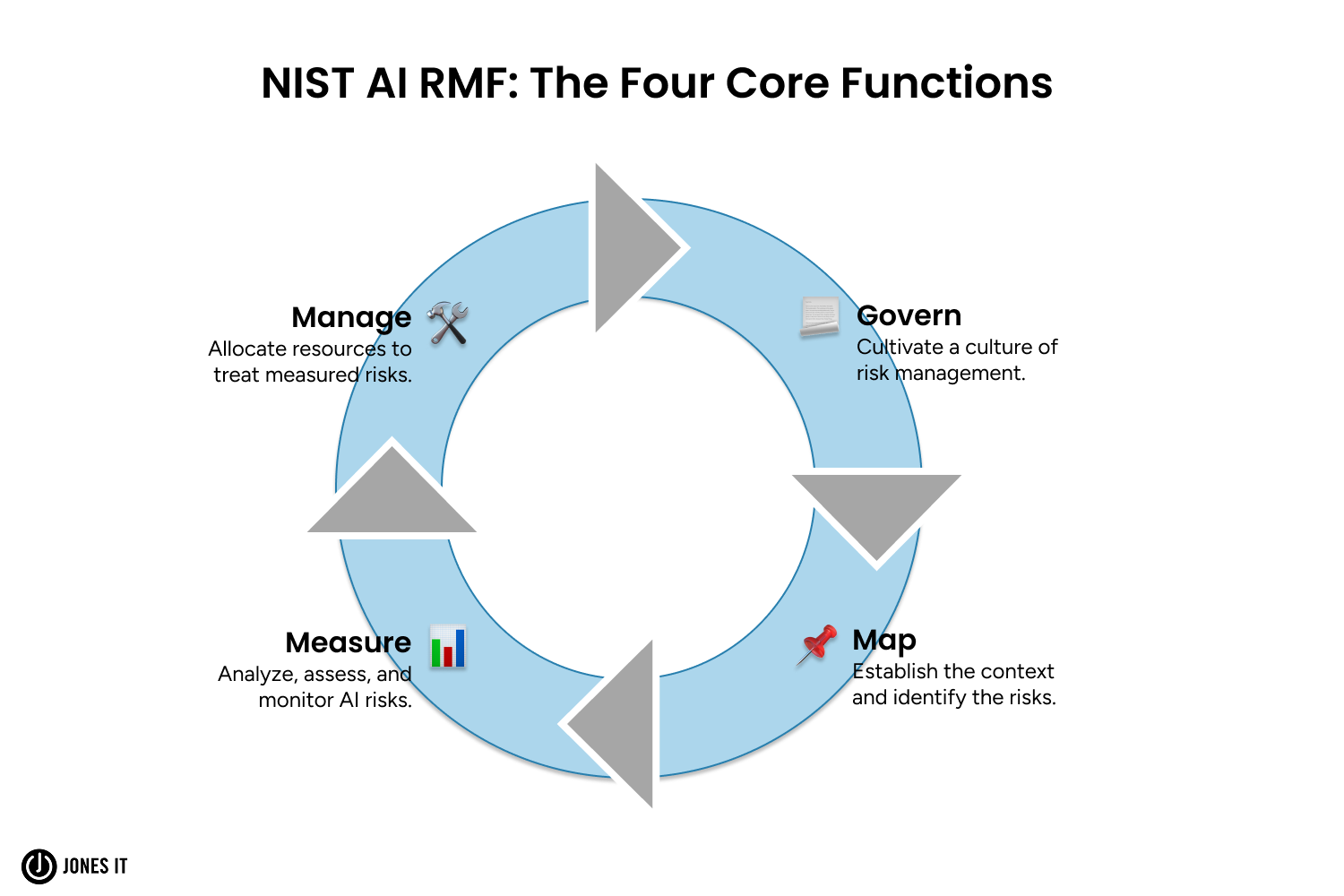

How the NIST AI Risk Management Framework Works in Practice

The NIST framework organizes AI risk management around four core functions that run continuously throughout your AI system's lifecycle. Understanding these functions is the foundation for everything that follows.

1. Govern (Building the Organizational Foundation for AI Risk Management)

Before you can manage AI risks effectively, you need the organizational structures, policies, and culture that make risk management possible in the first place. That's what the Govern function establishes.

In practice, this means three things for most small businesses.

First, cultivate a risk-aware culture. AI risk management fails quickly when it's viewed as a compliance burden owned exclusively by the IT security team. Successful programs integrate risk awareness into how product teams, operations, and leadership think about every AI deployment decision.

Second, define accountability clearly. When a model exhibits bias, who has the authority to pause deployment? When security identifies a vulnerability, who prioritizes the fix? Ambiguous accountability guarantees slow responses to emerging risks. The specific answers matter less than having clear answers.

Third, provide targeted training. Teams deploying AI don't need to become AI security experts, but they do need to understand common failure modes, know what red flags to escalate, and understand their role in the process.

This governance layer corresponds to what FAICP calls Layer I, the cybersecurity foundations that need to be in place before you tackle AI-specific risks. Organizations that skip this step and jump directly to technical controls tend to discover, usually at a bad moment, that without clear accountability and policy frameworks, risk management becomes reactive firefighting rather than proactive system design.

2. Map (Establishing Context and Framing Risks)

Not all AI systems create the same risks. A customer service chatbot creates a very different exposure than an automated lending decision system. The Map function is where you establish the specific context for each AI system so you can assess its risks accurately rather than generically.

For each system, that means defining its intended purpose with precision, not just "improve customer service" but exactly what decisions it makes, what inputs it processes, and what it was and wasn't designed for. It means identifying who interacts with the system and whether any vulnerable populations need additional protections. And it means documenting both the positive outcomes when the system works correctly and the specific consequences when it fails.

Critically, it also means mapping the full system, not just the model. Data sources, preprocessing steps, integration points, third-party components, and deployment infrastructure can all contain vulnerabilities. AI risk doesn't live only inside the model itself.

This corresponds to FAICP's Layer II, which addresses AI-specific assets, procedures, and threat assessment, including the socio-technical risks unique to AI systems, like loss of transparency and the challenges of managing bias at scale.

One thing worth noting: many SMEs discover during the mapping process that they don't fully understand their AI systems, particularly when using third-party models or platforms. That knowledge gap itself is a risk that needs to be addressed before moving forward.

3. Measure (Turning AI Risks from Abstract Concerns into Concrete Metrics)

Risk management only works when risks are concrete and quantified. The Measure function is where you establish the metrics, benchmarks, and monitoring approaches that make that possible.

Different AI systems require different measurements. Classification accuracy matters for some applications, while fairness metrics are more critical for others. For systems that face potentially malicious users, reliability under adversarial conditions becomes the most important metric of all. The key is choosing measurements that are actually aligned with your specific risks rather than defaulting to whatever metrics are easiest to collect.
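As one illustration of a fairness metric, the sketch below compares selection rates across groups and divides the lowest by the highest. The data is invented, the function names are ours, and the 0.8 threshold is borrowed from the "four-fifths" rule of thumb used in US employment screening. Treat it as a trigger for closer review, not as a legal test or the right metric for every system.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, was_selected) pairs -> {group: selection rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest


# Invented outcomes from a hypothetical resume-screening tool.
records = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 25 + [("group_b", False)] * 75
)
rates = selection_rates(records)
ratio = impact_ratio(rates)
needs_review = ratio < 0.8  # assumed threshold; pick one that fits your context
print(rates, round(ratio, 3), needs_review)
```

Here group_a is selected 40% of the time and group_b 25%, giving a ratio of 0.625, which falls below the assumed threshold and would be flagged for a closer look.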

Beyond initial validation, ongoing monitoring matters just as much. AI systems drift over time as data distributions change and user behavior evolves. Performance that was acceptable at deployment can degrade gradually without triggering any obvious alarm, which is precisely why continuous monitoring needs to be part of the plan from day one, not an afterthought.
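A minimal sketch of that kind of monitoring might look like the following. It assumes you can check at least a sample of the system's outputs against the correct answer. The class name, window size, and tolerance are illustrative choices, not recommendations from NIST or ENISA.

```python
from collections import deque


class AccuracyDriftMonitor:
    """Flags when rolling accuracy falls below the deployment baseline."""

    def __init__(self, baseline_accuracy: float, tolerance: float = 0.05, window: int = 200):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # keeps only the most recent results

    def record(self, was_correct: bool) -> None:
        self.outcomes.append(1 if was_correct else 0)

    def current_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def has_drifted(self) -> bool:
        # Wait for a full window so a few early errors don't trigger a false alarm.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.current_accuracy() < self.baseline - self.tolerance


# Hypothetical check: the model was 95% accurate at launch, and 85 of the
# last 100 reviewed outputs were correct.
monitor = AccuracyDriftMonitor(baseline_accuracy=0.95, tolerance=0.05, window=100)
for i in range(100):
    monitor.record(was_correct=(i >= 15))
print(monitor.current_accuracy(), monitor.has_drifted())
```

Accuracy is only one signal. The same rolling-window pattern can be applied to whichever metric you chose for the system, such as a fairness ratio or an escalation rate.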

One honest caveat is that measuring AI risks is genuinely difficult. Consensus on the best measurement methods is still emerging, and assessing risk in real-world operational settings is much harder than in controlled test environments. Don't let the perfect be the enemy of the good here. Imperfect metrics that you actually act on are far more valuable than perfect metrics you're still designing.

4. Manage (Translating Risk Assessment into Concrete Action)

Identifying risks accomplishes nothing without action. The Manage function is where risk assessment turns into prioritized responses, incident handling, and the continuous improvement that keeps your risk management relevant over time.

Start by prioritizing risks based on impact and likelihood. Not every risk warrants immediate action, and trying to address everything at once leads to spreading resources so thin that nothing gets addressed properly. Document your rationale for deprioritizing lower risks so those decisions stay transparent and revisable as circumstances change.

For each priority risk, define your response strategy. Some risks call for mitigation through technical controls. Others are better handled through transfer, via insurance or vendor contracts. Some warrant avoidance, meaning simply not deploying certain capabilities. And some can be accepted explicitly, with clear leadership acknowledgment of the remaining exposure.
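One lightweight way to keep this visible is a small risk register. The sketch below is an assumption about how you might structure one: the 1 to 5 scales, the impact-times-likelihood score, and the example risks are all illustrative. The four response options are the ones described above.

```python
from dataclasses import dataclass
from enum import Enum


class Response(Enum):
    MITIGATE = "mitigate"   # technical controls
    TRANSFER = "transfer"   # insurance or vendor contracts
    AVOID = "avoid"         # don't deploy the capability
    ACCEPT = "accept"       # explicit leadership acknowledgment


@dataclass
class Risk:
    name: str
    impact: int       # 1 (minor) to 5 (severe), an assumed scale
    likelihood: int   # 1 (rare) to 5 (expected), an assumed scale
    response: Response
    rationale: str = ""  # why this response, so the decision stays revisable

    @property
    def score(self) -> int:
        return self.impact * self.likelihood


register = [
    Risk("Internal summarizer misses a detail", 1, 3, Response.ACCEPT,
         "Low impact; leadership sign-off recorded"),
    Risk("Hiring screen scores some groups lower", 5, 3, Response.MITIGATE,
         "Add bias testing before each release"),
    Risk("Vendor model outage", 2, 2, Response.TRANSFER,
         "Covered by vendor contract terms"),
    Risk("Chatbot states the wrong refund policy", 3, 4, Response.MITIGATE,
         "Restrict answers to approved policy text"),
]

prioritized = sorted(register, key=lambda risk: risk.score, reverse=True)
for risk in prioritized:
    print(f"{risk.score:>2}  {risk.response.value:<8}  {risk.name}")
```

Keeping the rationale next to each entry is what makes the deprioritized items transparent and easy to revisit later.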

Just as important is building genuine incident response capability. When an AI system fails, response time matters enormously. Develop playbooks for common failure modes in advance: what's the process for pausing a model that's exhibiting bias? How do you communicate with affected users? Who approves restoration after remediation? Plans made during a crisis are always inferior to plans made with time to think.

Finally, establish clear channels for users to report concerns or unexpected behavior. In our experience, users often detect AI failures before monitoring systems do. Making it easy to flag problems is one of the highest-leverage things you can do.

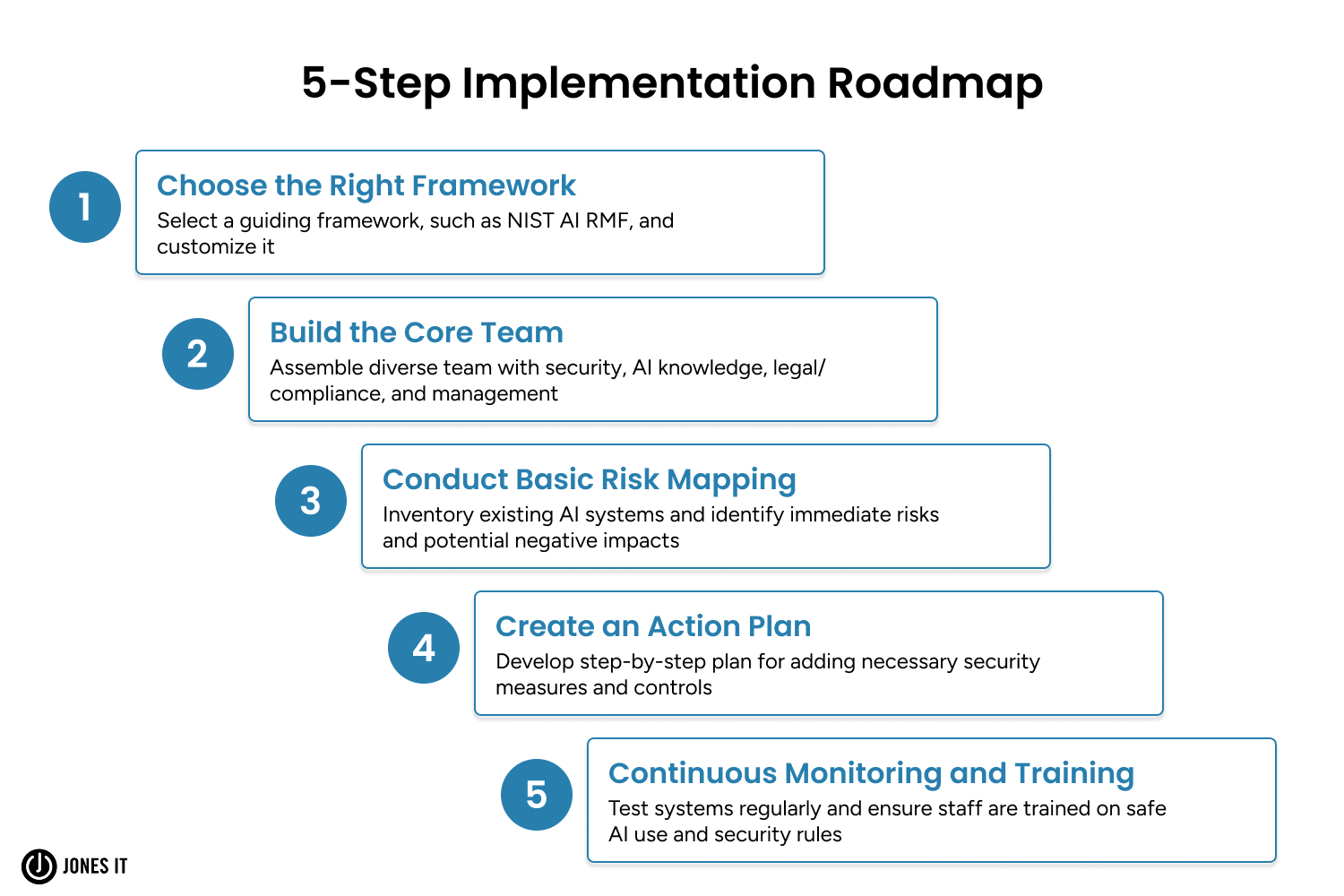

A 5-Step AI Risk Management Implementation Roadmap for SMEs

Understanding the framework conceptually is a good start. But implementation means translating those concepts into specific actions, sequenced in a way that builds capability progressively without overwhelming limited resources. Here's how to do that.

Step 1: Choose Your Framework Foundation

Select the NIST AI RMF as your primary framework. It offers the best balance of comprehensiveness and practical accessibility for small businesses. Rather than trying to comply with multiple frameworks simultaneously, build deep competency with one first. Once your risk management practices mature, mapping to additional frameworks becomes much more straightforward.

To get started, download the NIST AI RMF documentation and focus first on understanding the four core functions and their intent. Detailed implementation guidance can come later. Budget roughly 40 hours of leadership time to understand the framework and define how it applies to your organization's specific AI usage. This isn't work to delegate. Leadership needs to own the strategic framing.

Step 2: Build Your Core Team

Assemble a team that covers four perspectives: security expertise, AI technical knowledge, legal and compliance understanding, and business context. In most small businesses, individuals will wear multiple hats across those perspectives, and that's perfectly fine. What matters is that all four viewpoints are actually represented in the room when decisions get made.

For a typical SME, that means your most security-savvy technical person, the lead engineer or data scientist working with AI, legal counsel or a compliance officer (even if part-time or contracted), a product or operations leader who understands business impact, and a C-level executive sponsor who can allocate resources and overcome organizational resistance when needed.

For team members new to AI security, the Certified AI Security Professional (CAISP) course provides solid foundational knowledge without requiring a deep technical background. Once established, expect core team members to dedicate four to eight hours per month to risk management activities, with more intensive investment during the initial implementation period.

Step 3: Conduct Basic Risk Mapping

Inventory your existing AI systems and conduct an initial risk assessment for each one. For each system, document its purpose and use case, data sources and preprocessing steps, model architecture at a high level, user populations, the consequences of errors, third-party components and dependencies, and current controls alongside their gaps.

Start with your highest-risk systems, specifically any AI that makes decisions affecting individuals, such as hiring, lending, pricing, or access to services. Customer-facing systems that represent your brand come next. Internal efficiency tools that don't process sensitive data are lower priority.
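If a spreadsheet feels too loose, the inventory can be sketched as a simple record with a priority rule. The field names below mirror the items listed above but are our own naming. Placing an internal tool that does handle sensitive data in tier 2 is an assumption, since the guidance above doesn't address that case.

```python
from dataclasses import dataclass, field


@dataclass
class AISystemRecord:
    name: str
    purpose: str
    data_sources: list[str]
    model_architecture: str          # high level only, e.g. "vendor LLM via API"
    user_populations: list[str]
    error_consequences: str
    third_party_components: list[str] = field(default_factory=list)
    current_controls: list[str] = field(default_factory=list)
    control_gaps: list[str] = field(default_factory=list)
    affects_individuals: bool = False       # hiring, lending, pricing, access to services
    customer_facing: bool = False
    processes_sensitive_data: bool = False


def mapping_priority(system: AISystemRecord) -> int:
    """1 = map first, 3 = map last."""
    if system.affects_individuals:
        return 1
    if system.customer_facing or system.processes_sensitive_data:  # second clause is an assumption
        return 2
    return 3


# Hypothetical inventory entries.
screener = AISystemRecord(
    name="Resume screener", purpose="Rank applicants for recruiter review",
    data_sources=["applicant tracking system"], model_architecture="vendor ranking model",
    user_populations=["job applicants", "recruiters"],
    error_consequences="Qualified applicants rejected; discrimination exposure",
    affects_individuals=True,
)
chatbot = AISystemRecord(
    name="Support chatbot", purpose="Answer order and refund questions",
    data_sources=["help center articles"], model_architecture="vendor LLM via API",
    user_populations=["customers"], error_consequences="Wrong policy stated to customers",
    customer_facing=True,
)
inventory = sorted([chatbot, screener], key=mapping_priority)
print([system.name for system in inventory])
```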

Budget eight to sixteen hours per system for the initial mapping. Complex systems with multiple integration points take more time. Simple vendor-provided tools typically take less. And keep in mind that AI risk assessment requires effort beyond traditional cybersecurity. Bias, fairness, and transparency concerns don't appear on a standard network security review checklist.
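Putting the time figures from Steps 1 through 3 together gives a rough first-year estimate. The sketch below simply adds them up. The team size of five and the six systems are hypothetical inputs, and since the initial implementation period is more intensive than the steady state, read the result as a floor rather than a forecast.

```python
def first_year_hours(
    num_systems: int,
    team_size: int,
    leadership_hours: int = 40,   # Step 1: understanding and framing the framework
    monthly_hours=(4, 8),         # Step 2: per core team member, per month
    mapping_hours=(8, 16),        # Step 3: per system, initial mapping
):
    """Return a (low, high) estimate of first-year hours."""
    low = leadership_hours + team_size * monthly_hours[0] * 12 + num_systems * mapping_hours[0]
    high = leadership_hours + team_size * monthly_hours[1] * 12 + num_systems * mapping_hours[1]
    return low, high


# Hypothetical SME: five core team members and six AI systems in the inventory.
low, high = first_year_hours(num_systems=6, team_size=5)
print(f"Plan for roughly {low} to {high} hours in year one")  # 328 to 616
```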

Step 4: Create Your Action Plan

With your risk mapping in hand, develop a sequenced plan for implementing the necessary controls. Order initiatives by four factors: risk severity (impact if the risk materializes), risk likelihood (probability based on current controls), implementation feasibility (your actual capacity to execute), and dependency relationships (some controls must come before others can work).
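The four factors can be combined in more than one way. The sketch below shows one possible approach, not a prescribed method: dependencies are hard constraints, risk score (severity times likelihood) decides what goes first among unblocked items, a prerequisite inherits the score of the most dangerous item it unblocks, and feasibility only breaks ties. The initiative names and scores are invented.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass
class Initiative:
    name: str
    severity: int      # 1 to 5, impact if the risk materializes
    likelihood: int    # 1 to 5, probability given current controls
    feasibility: int   # 1 (hard) to 5 (easy), given your actual capacity
    depends_on: list[str] = field(default_factory=list)


def sequence(initiatives: list[Initiative]) -> list[str]:
    by_name = {item.name: item for item in initiatives}
    dependents = {name: [] for name in by_name}
    for item in initiatives:
        for dep in item.depends_on:
            if dep not in by_name:
                raise ValueError(f"{item.name} depends on unknown initiative {dep!r}")
            dependents[dep].append(item.name)

    sorter = TopologicalSorter({item.name: item.depends_on for item in initiatives})
    sorter.prepare()  # raises graphlib.CycleError if dependencies are circular

    urgency_cache: dict[str, int] = {}

    def urgency(name: str) -> int:
        # Own risk score, or the score of the riskiest item this one unblocks.
        if name not in urgency_cache:
            own = by_name[name].severity * by_name[name].likelihood
            urgency_cache[name] = max([own] + [urgency(d) for d in dependents[name]])
        return urgency_cache[name]

    ordered, ready = [], []
    while sorter.is_active():
        ready.extend(sorter.get_ready())  # items whose prerequisites are all done
        ready.sort(key=lambda n: (urgency(n), by_name[n].feasibility), reverse=True)
        next_item = ready.pop(0)
        ordered.append(next_item)
        sorter.done(next_item)
    return ordered


plan = sequence([
    Initiative("Define who can pause a model", severity=3, likelihood=3, feasibility=5),
    Initiative("Log chatbot conversations", severity=2, likelihood=3, feasibility=4),
    Initiative("Inventory hiring data sources", severity=2, likelihood=2, feasibility=5),
    Initiative("Bias testing for hiring model", severity=5, likelihood=4, feasibility=2,
               depends_on=["Inventory hiring data sources"]),
])
print(plan)
```

In this example the data inventory jumps to the front even though its own score is low, because the highest-risk item cannot start without it. The easy but lower-risk items come afterwards, which is the discipline described in the next paragraph.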

The critical discipline here is fixing your most dangerous issues first, even when they're not the easiest fixes. Quick wins that don't reduce meaningful risk create false confidence that is often more dangerous than the gap itself.

When presenting this plan to non-technical leadership, translate technical language into business outcomes. "Implement bias testing" doesn't land. "Establish testing to detect if our hiring AI systematically discriminates, reducing legal exposure and improving talent acquisition outcomes" connects to what leadership actually cares about.

Step 5: Continuous Monitoring and Training

Once your initial controls are in place, shift to continuous monitoring and regular security assessments. At minimum, run security checks every six months, or whenever an AI system changes significantly through new models, new data sources, new use cases, or new user populations.
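That rule is simple enough to automate as a reminder. The sketch below treats six months as 182 days and leaves it to you to decide what counts as a significant change. Both are assumptions made for illustration.

```python
from datetime import date, timedelta

REVIEW_INTERVAL = timedelta(days=182)  # roughly six months


def review_due(last_review: date, today: date, significant_change: bool = False) -> bool:
    """True if the system changed significantly or the review interval has elapsed."""
    return significant_change or (today - last_review) >= REVIEW_INTERVAL


# Hypothetical dates for illustration.
print(review_due(date(2024, 1, 1), date(2024, 3, 1)))                            # False: only 60 days
print(review_due(date(2024, 1, 1), date(2024, 8, 1)))                            # True: 213 days
print(review_due(date(2024, 1, 1), date(2024, 1, 15), significant_change=True))  # True: new data source
```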

For training, think in three tiers. All staff who interact with AI systems need basic awareness of AI risks and a clear sense of when to escalate concerns. Those deploying or managing AI need deeper knowledge of risk management processes and their specific responsibilities. Your security and compliance team members need technical skills for AI security assessment and monitoring.

To keep the program improving over time, regularly review near-misses and incidents, track external AI failures in your industry for transferable lessons, update controls as new threats emerge, and revise policies as the regulatory landscape evolves. The reality is that AI risk management isn't a project with a completion date. It's an ongoing operational capability that needs to be budgeted as such.

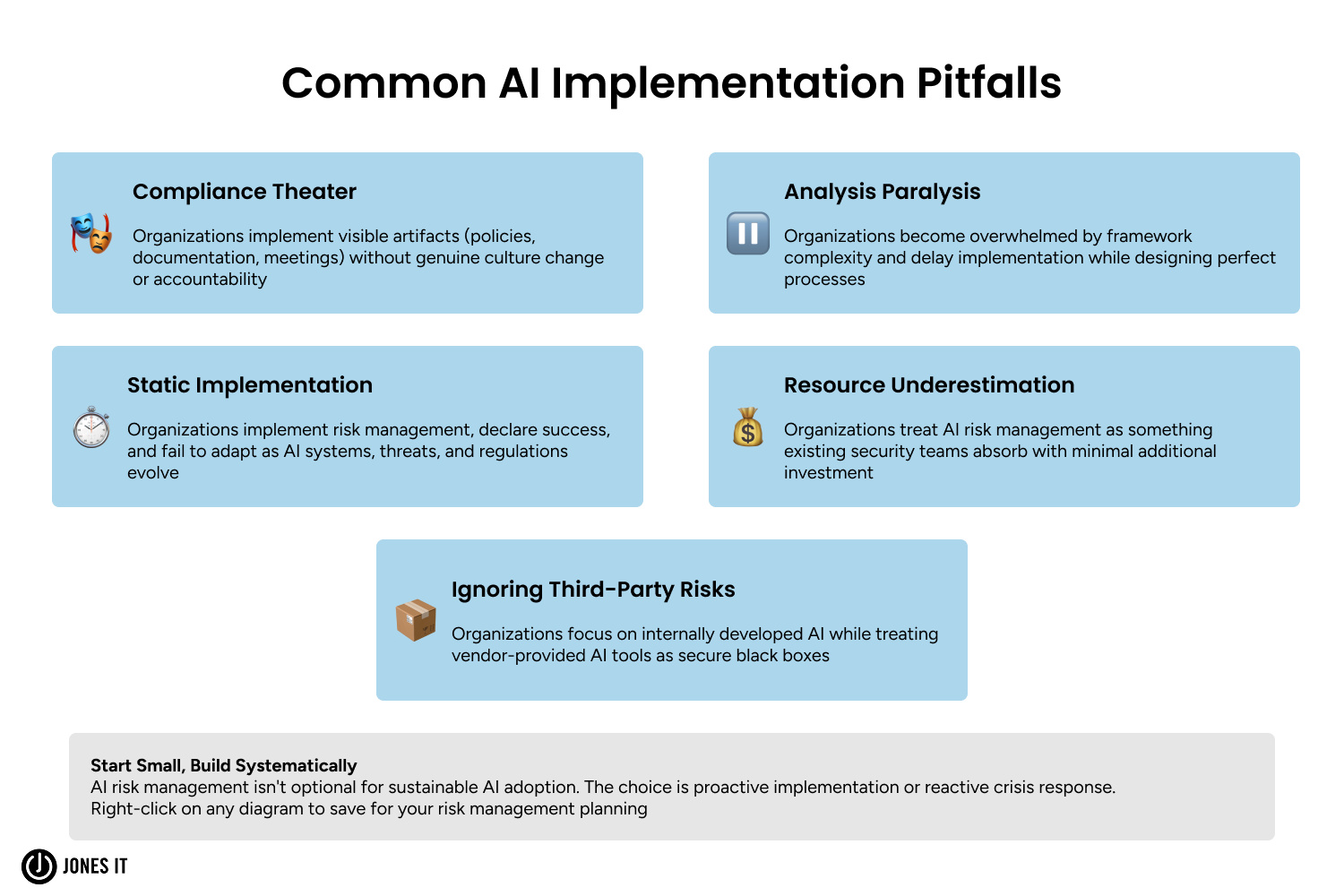

5 AI Risk Management Pitfalls Small Businesses Commonly Fall Into

Even with the right framework in place, there are patterns of failure that show up repeatedly. Knowing them in advance makes them much easier to avoid.

Pitfall 1: Treating risk management as compliance theater.

This happens when organizations implement the visible artifacts of risk management, such as policies, documentation, and review meetings, without genuine culture change or accountability behind them. The consequence is that when an AI system actually fails, it turns out the documented processes weren't followed, accountability was unclear, and controls that looked good on paper never existed in practice. The fix is to tie risk management to business outcomes that leadership genuinely cares about (protecting revenue, avoiding costs, enabling sustainable AI adoption) rather than framing it as a compliance exercise.

Pitfall 2: Analysis paralysis from framework complexity.

Comprehensive frameworks can overwhelm organizations into delaying implementation while they search for a perfect process design. Meanwhile, competitors are running imperfect but functional risk management that evolves over time. The fix is to implement in phases: start with basic controls for your highest-risk systems this quarter, then expand scope and sophistication progressively from there.

Pitfall 3: Underestimating what this actually costs in time and resources.

When leadership treats AI risk management as something the existing security team absorbs with minimal additional investment, security teams become overwhelmed, oversight becomes superficial, and the actual risk reduction is minimal even when everything looks compliant on paper. The fix is to budget realistically. If you can't invest adequately, the right response is to scope your AI ambitions to match your actual risk management capacity.

Pitfall 4: Ignoring vendor and third-party AI risks.

Many organizations focus risk management on internally developed AI while treating vendor-provided tools as secure black boxes. The problem is that when vendor AI fails, customer and regulatory impact lands on your organization regardless of where the AI came from. The fix is to extend your risk management to vendor AI through due diligence, contractual transparency requirements, and independent validation of vendor claims where feasible.

Pitfall 5: Treating risk management as a one-time project.

Some organizations implement a framework, declare success, and then stop adapting as systems, threats, and regulations evolve. They discover that controls that were adequate at deployment become insufficient over time. The fix is to build continuous improvement into your operating model from the start, with scheduled reviews, system drift monitoring, and proactive updates to controls rather than waiting for incidents.

Why AI Risk Management Is a Competitive Advantage for Small Businesses

There's a framing problem that holds many SME leaders back from taking AI risk management seriously. It gets presented as a tension between risk management and innovation, as if protecting your organization competes with competitive advantage. That framing gets things exactly backwards.

Unmanaged AI risk doesn't enable innovation. Instead, it creates technical debt and organizational vulnerability that eventually force corrective action under the worst possible circumstances. By contrast, organizations that build risk management into their AI strategy from the beginning tend to move faster and more confidently, not slower, because they're not haunted by unquantified risks and unclear accountability.

They also attract a different caliber of customer and partner. As AI use accelerates and sustainable adoption becomes a business differentiator, the companies with mature risk management practices are increasingly the ones winning enterprise contracts and building partnerships that last.

As regulations tighten globally, the competitive advantage belongs to organizations with established risk management capabilities already in place. They'll expand AI usage confidently while competitors are scrambling to retrofit compliance onto deployed systems under regulatory pressure. That retrofit is far more expensive and operationally disruptive than building it right from the start.

The good news is that the NIST AI RMF provides an accessible foundation that doesn't require a large enterprise or a dedicated AI security team to implement. It requires leadership commitment, a small core team, and the willingness to start small and build incrementally. Everything else follows from there.

Your organization is already deploying AI. The question is whether you're managing the associated risks deliberately, or accumulating hidden liabilities that will eventually demand attention at a time and on terms you didn't choose.

The frameworks are accessible. The playbook exists. The only remaining question is whether you'll act on it.

At Jones IT, we've guided hundreds of Bay Area businesses through AI governance and risk management implementation. If you'd like to talk through what this looks like for your organization, reach out and schedule a free consultation.